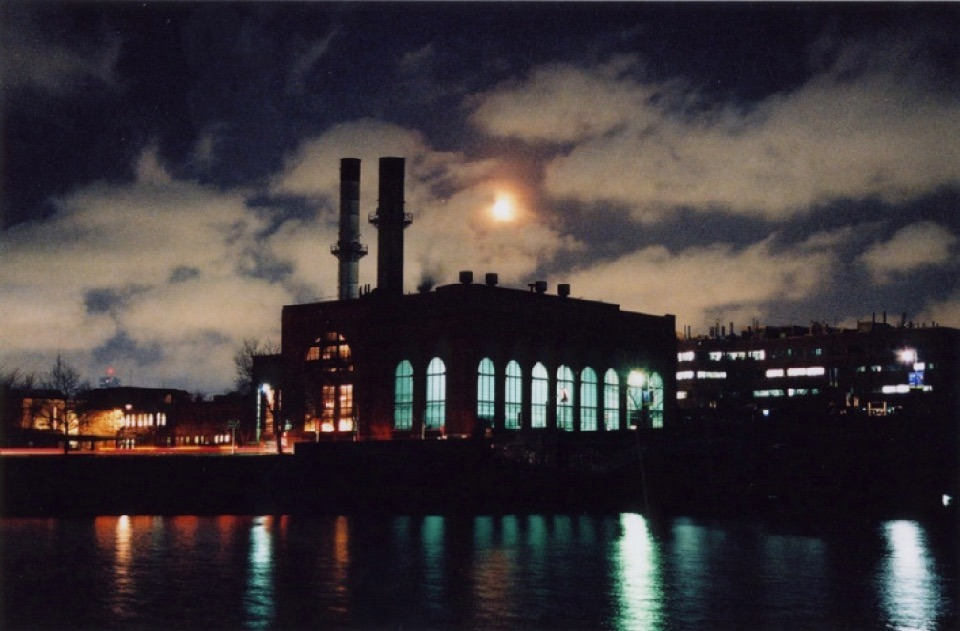

(BlackStone Station at night; http://infraobservatory.com/ Photo: Jim Muhk)

Tim Hwang is director of the Harvard–Massachusetts Institute of Technology (MIT) Ethics and Governance of AI Initiative, a philanthropic project working to ensure that machine learning and autonomous technologies are researched, developed, and deployed in the public interest. Previously, he was at Google, where he was the company’s global public policy lead on artificial intelligence (AI), and was responsible for managing outreach to government and civil society on issues surrounding the social impact of the technology. His current research focuses on the geopolitical dimensions of computational power, and the impact of postwar home construction on children’s folk games.

He spoke with Deena Chalabi, former Barbara and Stephan Vermut Associate Curator of Public Dialogue, for Public Knowledge in October 2018.

Deena Chalabi: Tell us about your work with Data & Society.

Tim Hwang: My work at Data & Society was a research program on the implications of machine intelligence, looking at how people think about autonomy. So the project took a more anthropological or ethnographic approach to the technology: how do people think about AI, and how does that influence their interactions with technology?

This ended up being a two-year research project with my collaborator, Madeleine C. Elish, called Intelligence and Autonomy. We worked on a number of different issues. I think one of the more interesting ones was around the notion of what we called “moral crumple zones.” There’s a historical pattern in automation where the automated system takes on more and more of the task, but a human operator is still present, with less and less control over the system. And so when things go wrong, the human basically functions as a scapegoat for what went wrong with the technology. That’s something that has happened in the past, and we think it’ll continue to occur as artificial intelligence is introduced in a whole range of domains.

DC: That’s fascinating. Now, as you know, Public Knowledge looks at urban change in addition to questions around how knowledge is changing in the digital age. I also have heard that you started something called the Bay Area Infrastructure Observatory. What is that?

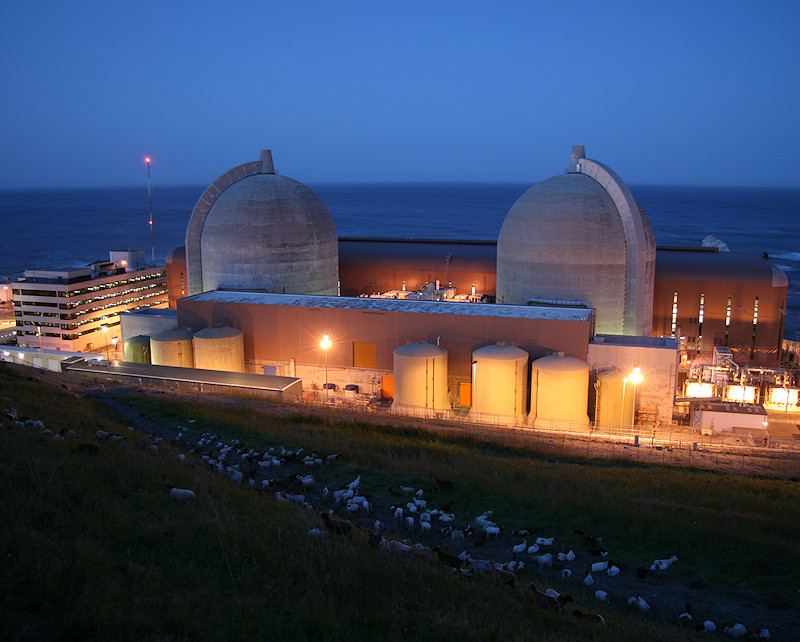

TH: The Bay Area Infrastructure Observatory was a project that I started with a number of friends a few years back. We wanted to see if it was possible to take a tour of a nuclear power plant. It turns out you can’t get into a nuclear power plant to take a tour unless you’re part of an organization. So we came up with a fictional organization with a fictional logo: the Bay Area Infrastructure Observatory. That allowed us to get in and take a tour, and we were like, “All right. That was great. What are all the other places we can go?” So this first visit launched a series of field trips to observe the infrastructure of the Bay Area. We went to the Santa Clara Water Treatment Plant. We went to the new Bay Bridge span before it opened. We went to the San Francisco Emergency Response Command Center. It eventually led to a book called *The Container Guide*, a field guide to spotting shipping containers in the wild.

(Diablo Canyon Nuclear Power Plant in Avila Beach, CA; http://infraobservatory.com/ Photo: Jim Zim)

DC: Were there any other outcomes or conversations around policy or around how these infrastructures might be adapted or changed or at least engaged with publicly in any other kinds of ways?

TH: One of the intriguing outcomes was that a lot of people told me they came for the fun field trip but stayed for the interesting questions about the politics of it all: Who makes choices around this technology? Where’s our water going to continue to come from? How are infrastructures designed? And there are a number of people who really got into local politics as a result of the process.

DC: OK, changing directions again (because you have so many projects we could talk about), what is the Zuckerberg thing?

TH: About a year or two ago, I was having some drinks with a friend, and I sheepishly admitted that I had been collecting compelling press images in which Mark Zuckerberg appears. And my friend was like, “Oh, man, I actually do that, as well.” We had a fun time talking about and sharing the various Zucks we had collected. And I was like, “There’s so much to talk about here.” The easiest way to collect some good thinking on it was to spin up an academic journal. So we started a periodical called the California Review of Images of Mark Zuckerberg. It’s an intermittently produced academic journal that exists online at zuckerbergreview.com. It’s a group of researchers writing media studies and art critique essays about the various images in which Mark Zuckerberg appears. It’s been a fun project. One of our articles later appeared in an issue of *Harper’s*. So I like to think we’re really giving rise to the burgeoning field of Zuckerberg studies.

(Homepage of zuckerbergreview.com; http://zuckerbergreview.com/ Photo: Tim Hwang)

DC: Who do you think has been most engaged with it?

TH: The responses to my projects that I’m happiest about run along the lines of, “At first I thought this was a joke, but it actually turned out to be extremely deep.” And I think that’s been the reception of this project. A lot of people have been like, “Oh, wow, you actually went through the effort of commissioning all these essays about Mark Zuckerberg.” I think people generally liked it. My current ambition is to see whether I can get the issues published as a bound volume, because many of them would work perfectly as a coffee table book.

DC: Going back to your day-to-day work, one of the things I want to ask about is the degree to which private entities are, in a sense, taking over the public good. They are supposedly working in the public interest, but to what extent is that actually happening? And as we move into this new phase of AI becoming even more important, how are people thinking about machine learning and the public interest?

TH: Private entities have played, and are playing, the leading role in our development of AI. That’s for a number of reasons. AI requires a large amount of data to pull off correctly. It requires a large amount of computing power to pull off correctly. And it turns out that the entities with access to precisely those resources are existing private platform players like Facebook and Google.

With so much hiring creating a big pipeline from academia into industry on this work, I think it’s a really big concern that this essential technology is being designed, deployed, and modified entirely within the confines of fairly opaque entities. The project that I help lead, the Ethics and Governance of AI Initiative, is trying to think about how we change that state of play. So the question ends up being: how can you influence the intentions or behavior of these companies to make them accountable to the public? We’re attacking that problem from a number of different directions. The approach I am most bullish on focuses on shaping the norms of the people who actually design these technologies and advance the research. There tends to be a lot of debate and a lot of friction between various constituencies within these companies, and one of the groups most concerned about the ethical deployment of the technology is the researchers themselves. So that’s one of the levers we’ve thought a lot about: how do we rally the people advancing the state of the art to change how the technology is used and deployed by the companies that employ them?

(Fish cultivation ponds running off of geothermal waste heat in Iceland; http://infraobservatory.com/ Photo: Luis Callejas)

DC: Right. And I was going to ask you about that. Where does the law or government fit into this conversation?

TH: I’m a big believer in the ability of public institutions to play a role in this. And I think we are actually seeing this, just not very much at the federal level. New York City has put together a council on algorithmic transparency and accountability, and a lot of other cities are implementing similar initiatives, as well. To that end, I actually wonder whether much of the action we’re going to see will happen not at the national level but at the level of states and municipalities. There’s a much longer, more complex conversation we could have about places like Europe.

DC: Among people doing research or advocacy, whether pushing for more transparency or thinking about the ethics and governance of data, where do you see the best work being done?

TH: I’m really excited about a number of organizations in the space. One is actually based right in San Francisco: the Human Rights Data Analysis Group, or HRDAG. HRDAG is an amazing team of data scientists who do investigative work around human rights. And there’s a researcher there by the name of Kristian Lum, who’s doing really amazing work thinking about the use of automated systems in the criminal justice system. They do really fascinating, cutting-edge work that is much needed.

(BAIO Field Day 2013 trip to Stanford Amateur Radio Club (W6YX) to bounce radio signals off of the moon; http://infraobservatory.com/post/53689506814/w6yx-field-day Photo: Tim Hwang)

DC: So, final question: who (else) is thinking in the most interesting ways about how we can be solving today’s or tomorrow’s social problems?

TH: I’ll mention two people I think are doing some amazing work. Kat Lo is a researcher who has done a lot of work thinking about how online platforms can deal with problems around harassment. In effect, she’s involved in the hard work of figuring out how we do a good job architecting our online social spaces in ways that are safer and more robust, more sustainable and more inclusive.

Another person who is great is Marie-Therese Png, who is an AI researcher currently at Oxford. She thinks about how cross-cultural, intersectional norms could be embedded in technologies like machine learning. In effect, she’s investigating the question of how we might go about decolonizing AI. This is a fascinating project and it’s very much needed.